this post was submitted on 09 Jun 2024

TechTakes

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community

founded 2 years ago

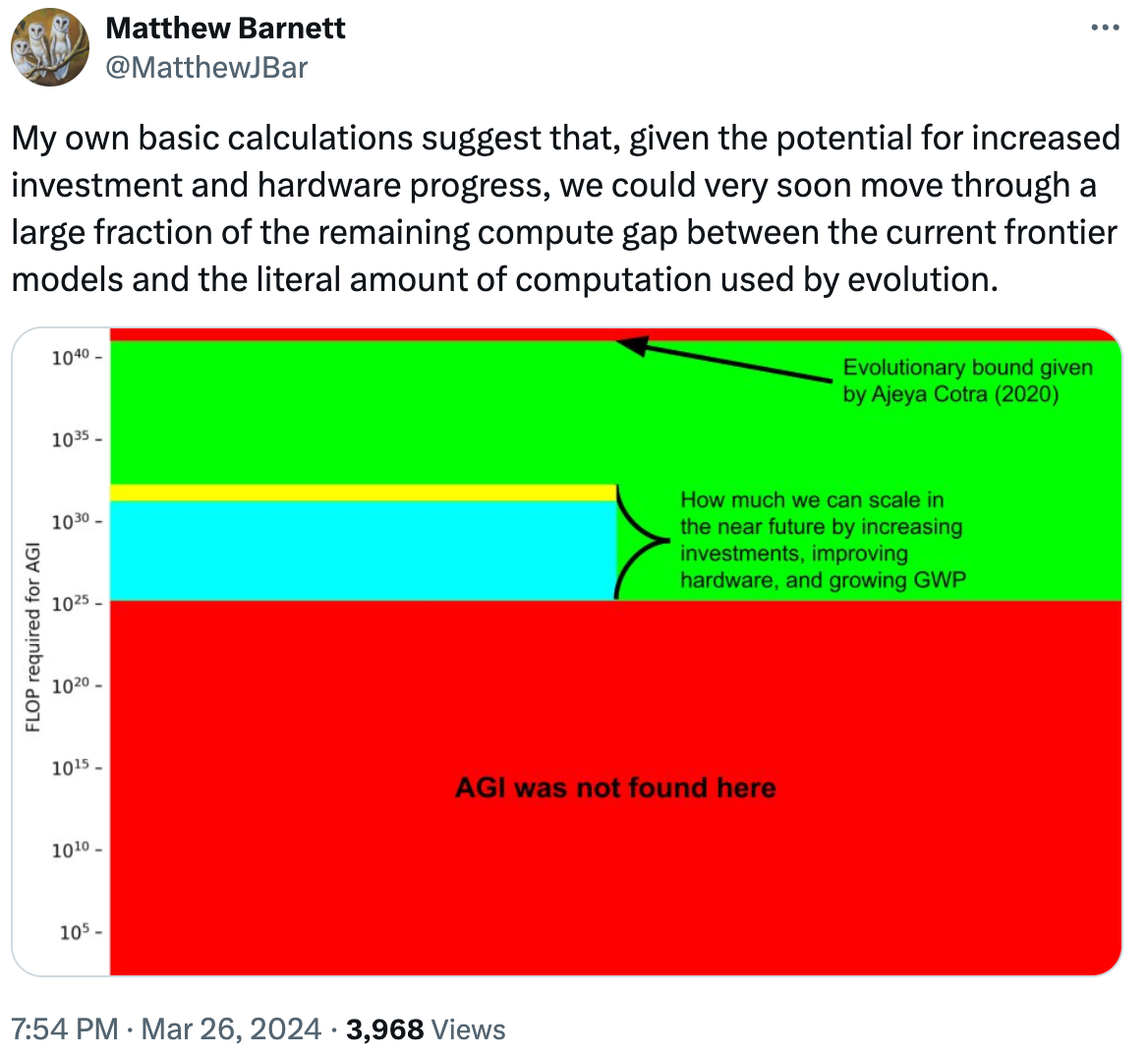

Yes, we know (there are papers about it) that for LLMs, every increase in capabilities needs exponentially more training data. But don't worry, we've only consumed half the world's data to train LLMs, so there's still plenty of room to grow ;).
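To spell out why "half the world's data" is not much headroom, here is a rough back-of-the-envelope sketch. The doubling factor is purely an assumption for illustration (the comment only says "exponentially more"), and `remaining_steps` is a made-up helper, not anything from the papers.

```python
def remaining_steps(consumed_fraction: float, growth_factor: float = 2.0) -> int:
    """Count how many more capability steps fit into the world's data.

    Assumption (hypothetical, for illustration only): each capability step
    needs `growth_factor` times the total data used for the previous one.
    """
    if not 0 < consumed_fraction <= 1:
        raise ValueError("consumed_fraction must be in (0, 1]")
    if growth_factor <= 1:
        raise ValueError("growth_factor must be greater than 1")

    steps = 0
    needed = consumed_fraction * growth_factor  # data needed for the next step
    while needed <= 1.0:  # 1.0 stands for all the data in the world
        steps += 1
        needed *= growth_factor
    return steps


print(remaining_steps(0.5))        # prints 1: one doubling and the data is gone
print(remaining_steps(0.5, 10.0))  # prints 0: a 10x step does not fit at all
```

Under that assumption, having used half of everything leaves exactly one more step, which is the joke.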