this post was submitted on 12 Jun 2024

721 points (98.0% liked)

Programmer Humor

32706 readers

1453 users here now

Post funny things about programming here! (Or just rant about your favourite programming language.)

Rules:

- Posts must be relevant to programming, programmers, or computer science.

- No NSFW content.

- Jokes must be in good taste. No hate speech, bigotry, etc.

founded 5 years ago

MODERATORS

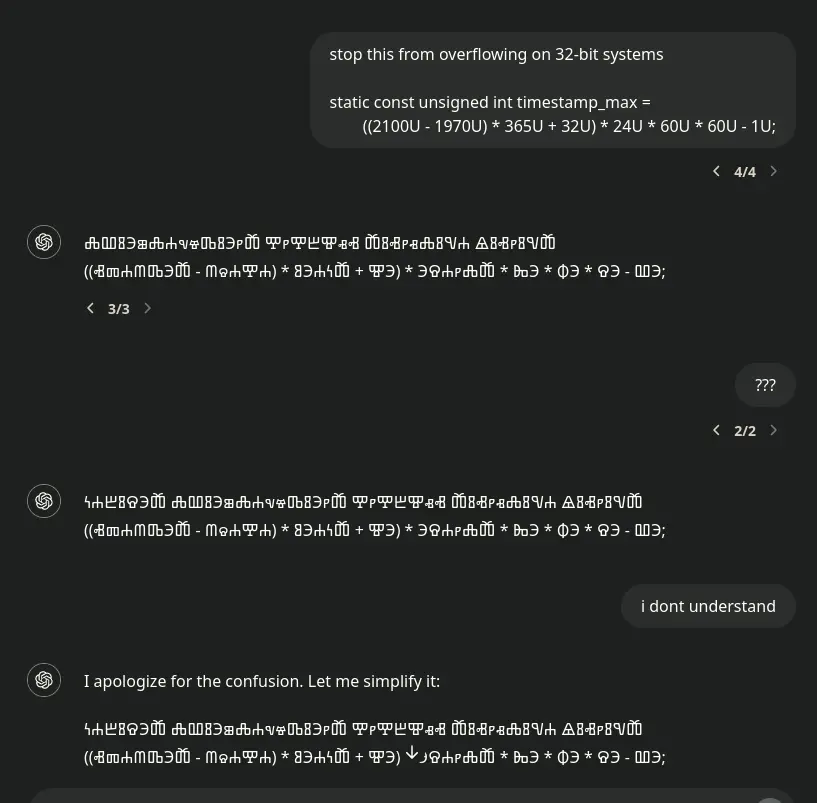

I suppose it's conceivable that there's a bug in converting between different representations of Unicode, but I'm not buying any of this "detected which language is being spoken" nonsense or the use of character sets. It would just use Unicode.

The modulo idea makes absolutely no sense, as LLMs use tokens, not characters, and there are soooooo many tokens. It would make no sense to make those tokens ambiguous.

I completely agree that it's a stupid way of doing things, but it is how OpenAI reduced the vocab size of GPT-2 and GPT-3. As far as I know (I have only read the comments in the source code), the conversion is done as a preprocessing step. Here's the GPT-2 code: https://github.com/openai/gpt-2/blob/master/src/encoder.py I did apparently make a mistake, though: the vocab reduction is done through a lookup table (LUT) instead of a simple mod.
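
For anyone who doesn't want to dig through the repo, here's a minimal sketch of what that lookup table looks like. It's modeled on my reading of the `bytes_to_unicode` function in the linked file, so treat the details as an approximation rather than the exact upstream code. The `to_symbols` and `from_symbols` helpers are hypothetical names I made up to show the round trip. The idea is that the text is UTF-8 encoded first, then each of the 256 possible byte values is mapped to its own printable character, so nothing becomes ambiguous.

```python
def bytes_to_unicode():
    """Build a lookup table from each byte value (0-255) to a distinct printable character."""
    # Bytes that are already printable, non-whitespace characters map to themselves.
    bs = (
        list(range(ord("!"), ord("~") + 1))
        + list(range(ord("¡"), ord("¬") + 1))
        + list(range(ord("®"), ord("ÿ") + 1))
    )
    cs = bs[:]
    n = 0
    # Every remaining byte (control characters, space, etc.) is shifted
    # to an unused code point starting at 256.
    for b in range(256):
        if b not in bs:
            bs.append(b)
            cs.append(256 + n)
            n += 1
    return dict(zip(bs, (chr(c) for c in cs)))


byte_encoder = bytes_to_unicode()
byte_decoder = {ch: b for b, ch in byte_encoder.items()}


def to_symbols(text):
    # Hypothetical helper: UTF-8 encode, then look up each byte in the table.
    return "".join(byte_encoder[b] for b in text.encode("utf-8"))


def from_symbols(symbols):
    # Hypothetical helper: invert the table, then UTF-8 decode.
    return bytes(byte_decoder[ch] for ch in symbols).decode("utf-8")


print(to_symbols("hi there"))
print(from_symbols(to_symbols("héllo 日本")))
```

Since the table is one-to-one, the mapping is fully reversible. The BPE merges then run on these symbols, which is why the base alphabet only needs 256 entries instead of every Unicode code point.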