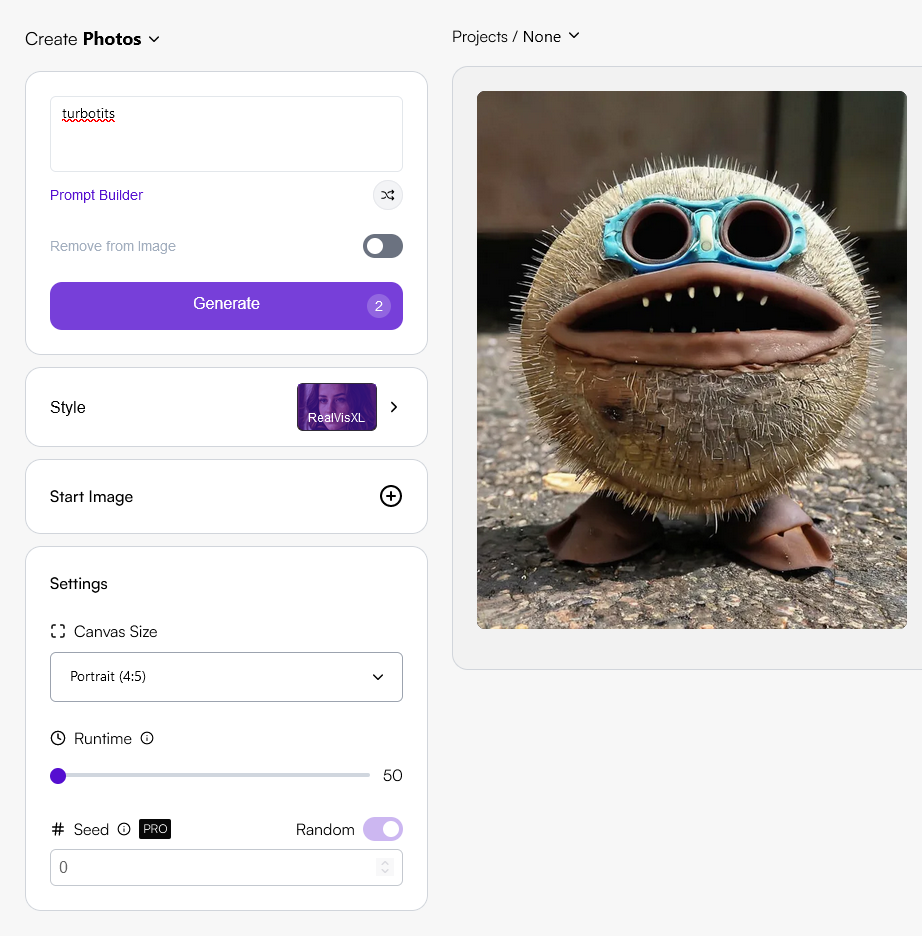

I don't know why it's cracking me up so much; I don't know what I was expecting. But I also can't find any obvious reason why this is happening. Maybe an artist name I can't find now? A product name translation?

~~Stable Diffusion XL doesn't seem to replicate the same results~~ (actually it kind of does at 768px instead of 512). Try any Stable Diffusion ~~1.x~~ model with only this positive prompt:

turbotits

| Settings | Image |
|---|---|
| Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 912261969, Size: 512x512, Model hash: f968fc436a, Model: analogMadness_v50, VAE hash: 735e4c3a44, VAE: vae-ft-mse-840000-ema-pruned.safetensors, Version: v1.6.0-2-g4afaaf8a |  |
| Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 1212726575, Size: 512x512, Model hash: 9aba26abdf, Model: deliberate_v2_sd1.5, VAE hash: 735e4c3a44, VAE: vae-ft-mse-840000-ema-pruned.safetensors, Version: v1.6.0-2-g4afaaf8a |  |
| Steps: 20, Sampler: DPM++ 2M, CFG scale: 7, Seed: 1020297828, Size: 512x512, Model hash: af9ab7cc05, Model: realcartoonPixar_v2_sd1.5, VAE hash: 735e4c3a44, VAE: animevae.pt, ADetailer model: yolov8n.pt, ADetailer confidence: 0.3, ADetailer dilate erode: 4, ADetailer mask blur: 4, ADetailer denoising strength: 0.4, ADetailer inpaint only masked: True, ADetailer inpaint padding: 32, ADetailer ControlNet model: control_v11p_sd15_inpaint [ebff9138], ADetailer ControlNet module: inpaint_global_harmonious, ADetailer version: 23.11.1, ControlNet 0: "Module: inpaint_global_harmonious, Model: control_v11p_sd15_inpaint [ebff9138], Weight: 1.0, Resize Mode: ResizeMode.INNER_FIT, Low Vram: False, Guidance Start: 0.0, Guidance End: 1.0, Pixel Perfect: True, Control Mode: ControlMode.BALANCED, Save Detected Map: True", Version: v1.6.0-2-g4afaaf8a |  |
| Steps: 20, Sampler: DPM++ 2M, CFG scale: 7, Seed: 2311419033, Size: 512x512, Model hash: cc6cb27103, Model: v1-5-pruned-emaonly, VAE hash: 735e4c3a44, VAE: vae-ft-mse-840000-ema-pruned.safetensors, Version: v1.6.0-2-g4afaaf8a |  |
| Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 2505533199, Size: 512x512, Model hash: fc2511737a, Model: chilloutmix_NiPrunedFp32Fix.sd1.5, VAE hash: 735e4c3a44, VAE: vae-ft-mse-840000-ema-pruned.safetensors, Clip skip: 2, Version: v1.6.0-2-g4afaaf8a |  |
| Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 3851799520, Size: 512x512, Model hash: fe4efff1e1, Model: HuggingFace_SD1.4, Clip skip: 2, Version: v1.6.0-2-g4afaaf8a |  |
| Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 2906017088, Size: 768x768, Model hash: e6bb9ea85b, Model: sdXL_v10VAEFix, Version: v1.6.0-2-g4afaaf8a |  |

Some models seem to show better results using clip skip 1 (vs. 2). Anyway, I got a chuckle from it. On a more utilitarian note, I suppose things like this could be used to gauge how "far" a model with an unknown merge history has drifted from a base model, perhaps? (Like DNA evolution, I mean. I'm wording it poorly.)

Settings for the post/linked image:

Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 366961196, Size: 512x704, Model hash: 8c4042921a, Model: aZovyaRPGArtistTools_v3VAE_sd1.5, VAE hash: 735e4c3a44, VAE: vae-ft-mse-840000-ema-pruned.safetensors, Denoising strength: 0.52, Hires upscale: 1.5, Hires upscaler: Latent, Refiner: centerflex_v28.sd1.5_safetensors [02e248bf1b], Refiner switch at: 0.8, Version: v1.6.0-2-g4afaaf8a
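
If anyone wants to compare these settings lines programmatically (say, to check that two runs really used the same sampler and VAE), here is a rough sketch of a parser. It assumes the format seen in this post: comma-separated `Key: value` pairs, with double quotes around any value that itself contains commas (like the `ControlNet 0` entry). The function name `parse_settings` is made up for illustration; it is not part of the web UI.

```python
import re

# One "Key: value" pair. The value is either a double-quoted string
# (which may contain commas and colons) or everything up to the next comma.
_PAIR = re.compile(r'\s*([^:,]+):\s*("(?:\\.|[^"\\])*"|[^,]*)(?:,|$)')


def parse_settings(line: str) -> dict[str, str]:
    """Split a generation-settings line into a {key: value} dict (hypothetical helper)."""
    settings = {}
    for key, value in _PAIR.findall(line):
        value = value.strip()
        # Drop the surrounding quotes from quoted values.
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]
        settings[key.strip()] = value
    return settings


if __name__ == "__main__":
    example = (
        "Steps: 20, Sampler: Euler a, CFG scale: 7, Seed: 912261969, "
        "Size: 512x512, Model hash: f968fc436a, Model: analogMadness_v50"
    )
    for key, value in parse_settings(example).items():
        print(f"{key} = {value}")
```

All values come back as strings, so `Steps` and `Seed` would need an `int()` before any numeric comparison.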