this post was submitted on 25 Nov 2025

155 points (93.3% liked)

From Wikipedia:

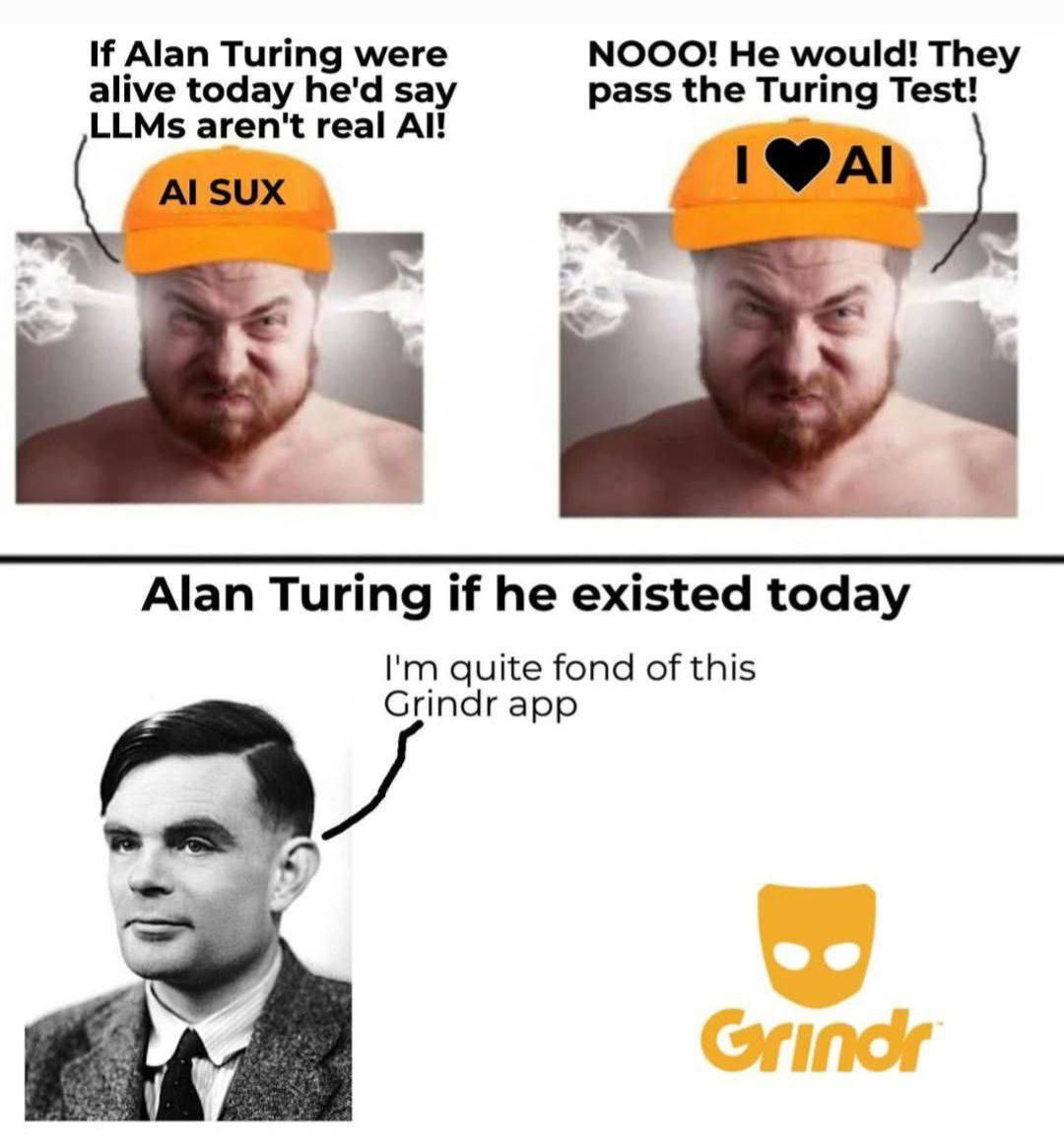

This isn't as hard a test as the one you're describing. There's research showing LLMs pass very similar tests:

That's not quite the same thing as LLMs being so good at imitating humans that a trained expert has no possible edge for telling the difference, but it is a major milestone, and I think it's technically accurate to say "AI has passed the Turing Test" at this point.

There are also theoretically infinite Turing tests. Some of them don't involve talking at all.

Alan designed a variety of tests to evaluate different and changing measures of intelligence.

We should assume he would have kept making new tests to address advancements in technology, and that the tests would usually be designed case by case, depending on how the tech was implemented and what the best methods for testing it would be.

Humans not passing means the machine won the test.

The goal of the Turing test is for a human to differentiate between human and AI. You can't be "too incompetent at being human"; you can be stupid, but that doesn't make you less human.

Moving both human and AI into the AI category = the categorization is broken, AI is indistinguishable from a human.

Technically, it involves three participants. The interrogator is supposed to pick which unseen participant is human. (Originally proposed as picking which participant is a woman, chatbots being extremely not invented yet.) If people can't tell the difference, there is no difference.
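To make the "can't tell the difference" point concrete, here's a rough toy simulation of that three-party setup. Everything in it is made up for illustration: `interrogator_accuracy` and `detect_prob` are hypothetical names, and `detect_prob` just stands in for how often the machine gives itself away. It isn't modeled on any real study.

```python
import random


def interrogator_accuracy(detect_prob, n_trials=10_000, seed=0):
    """Fraction of trials in which the interrogator correctly picks the human.

    detect_prob is an assumed, made-up parameter: the chance that the
    machine's answers give it away in a given trial. When there is no
    tell, the interrogator can only guess between the two participants.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        human_slot = rng.randrange(2)      # the human is unseen, in slot 0 or 1
        if rng.random() < detect_prob:
            guess = human_slot             # the machine gave itself away
        else:
            guess = rng.randrange(2)       # no tell, so it's a coin flip
        correct += guess == human_slot
    return correct / n_trials


if __name__ == "__main__":
    for p in (0.0, 0.3, 1.0):
        print(p, interrogator_accuracy(p))
```

With `detect_prob` at 0 the interrogator lands around 50%, which is the "no difference" case: picking the human is no better than flipping a coin. Anything reliably above that means there's still a tell.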

LLMs definitely are not there. I doubt they ever will be. They're the wrong shape of network. I object when people say there's nothing like cognition going on inside, but 'what's the next word' is the wrong function to be estimating if you want a machine smart enough to notice when it's wrong.

To be fair, some humans are utter idiots…