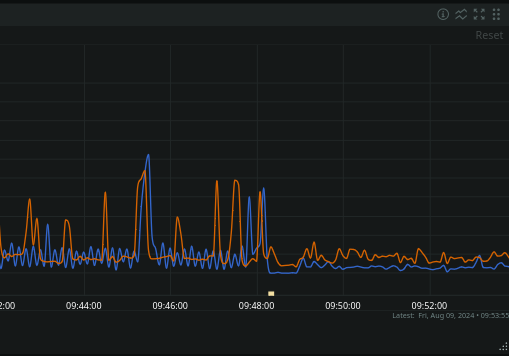

We enabled the Cloudflare AI bots and crawlers mode around 0:00 UTC (20 Sept).

This was because a huge number of AI scrapers were attempting to scan the whole lemmyverse.

It successfully blocked them... while also blocking federation 😴

I've disabled the block. Within the next hour we should see federation traffic come through.

Sorry for the unfortunate delay in new posts!

Tiff

This is SSO support as the client. So you could use any backend that supports OAuth (I assume, I haven't looked at it yet).

So you could use a Forgejo instance, immediately making your git hosting instance a social platform, if you wanted.

Or use something self-hostable like Hydra.

Or you can use the big platforms that already exist, such as Google or Microsoft. That allows faster onboarding for people joining the fediverse, and it passes the issues that come with user creation on to a bigger player who already does verification. All of these options are up to your instance to decide on.
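For anyone wondering what "SSO as the client" roughly means in practice, here is a minimal sketch of the first step of a standard OAuth 2.0 authorization-code flow: the instance builds a login URL and sends the user to whichever provider it has chosen. This is not taken from the Lemmy code (I haven't looked at it yet); the endpoint, client ID, redirect URI, and the `openid` scope below are all made-up placeholders.

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs


def build_authorization_url(authorize_endpoint, client_id, redirect_uri, scope="openid"):
    """Return (url, state) for the first step of an OAuth 2.0 authorization-code flow."""
    # Random value the client checks again on the callback to guard against CSRF.
    state = secrets.token_urlsafe(16)
    params = {
        "response_type": "code",   # ask the provider for an authorization code
        "client_id": client_id,    # issued by the provider when the instance registers
        "redirect_uri": redirect_uri,
        "scope": scope,            # assumption: an OpenID Connect style scope
        "state": state,
    }
    return f"{authorize_endpoint}?{urlencode(params)}", state


# Hypothetical values: swap in whatever your chosen provider gives you.
url, state = build_authorization_url(
    "https://sso.example.org/oauth/authorize",
    "my-lemmy-instance",
    "https://lemmy.example.org/oauth/callback",
)
query = parse_qs(urlparse(url).query)
print(url)
```

The point is that only the endpoint and client credentials change between providers, which is why a Forgejo instance, Hydra, Google, or Microsoft could all sit behind the same client-side support.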

The best part: if you don't agree with what your instance decides on, you can migrate to one whose policy coincides with your values.

Hope that gives you an idea of why this feature is warranted.