LemmyWorld -> Reddthat

What if I told you the problems LW -> Reddthat have is due to being geographically distant on a scale of 14000km?

Problem: Activities are sequential but requires external data to be validated/queried that doesn't come with the request. Server B -> A, says here is an activity. In that request can be a like/comment/new post. An example of a new post would mean that Server A, to show the post metadata (such as subtitle, or image) queries the new post.

Every one of these outbound requests that the receiving server does are:

- Sequential, (every request must happen in order: 1,2,3,4...

- Is blocking. Server B which sent a message to server A, must wait for Server A to say "I'm Finished" before sending the next item in queue.

- Are inherently subsequent to network latency (20ms to 600ms)

- Australia to NL is 278ms

- NL to LA is 145ms

- I picked NL because it is geographically, and literally, on the other side of the world from Australia. This is (one of) if not the longest route between two lemmy servers.

Actual Problem

So every activity that results in a remote fetch delays activities. If the total activities that results in more than 1 per 0.6s, servers physically cannot and will never be able to catch up. As such our decentralised solution to a problem requires a low-latency solution. Without intervention this will evidently ensure that every server will need to exist in only one region. EU or NA or APAC (etc.) (or nothing will exist in APAC, and it will make me sad) To combat this solution we need to streamline activities and how lemmy handles them.

A Possible Solution?

Batching, parallel sending, &/or moving all outbound connections to not be blocking items. Any solution here results in a big enough change to the Lemmy application in a deep level. Whatever happens, I doubt a fix will come super fast

Relevant traces to show network related issues, for those that are interested

Trace 1:

Lemmy has to verify a user (is valid?). So it connects to a their server for information. AU -> X (0.6) + time for server to respond = 2.28s but that is all that happened.

- 2.28s receive:verify:verify_person_in_community: activitypub_federation::fetch: Fetching remote object http://server-c/u/user

- request completes and closed connection

Trace 2:

Similar to the previous trace, but after it verfied the user, it then had to do another from_json request to the instance itself. (No caching here?) As you can see 0.74 ends up being the server on the other end responding in a super fast fashion (0.14s) but the handshake + travel time eats up the rest.

- 2.58s receive:verify:verify_person_in_community: activitypub_federation::fetch: Fetching remote object http://server-b/u/user

- 0.74s receive:verify:verify_person_in_community:from_json: activitypub_federation::fetch: Fetching remote object http://server-b/

- request continues

Trace 3:

Fetching external content. I've seen external servers take upwards of 10 seconds to report data, especially because whenever a fediverse link is shared, every server refreshes it's own data. As such you basically create a mini-dos when you post something.

- inside a request already

- 4.27s receive:receive:from_json:fetch_site_data:fetch_site_metadata: lemmy_api_common::request: Fetching site metadata for url: https://example-tech-news-site/bitcoin-is-crashing-sell-sell-sell-yes-im-making-a-joke-here-but-its-still-a-serious-issue-lemmy-that-is-not-bitcoin

Trace 4:

Sometimes a lemmy server takes a while to respond for comments.

- 1.70s receive:community: activitypub_federation::fetch: Fetching remote object http://server-g/comment/09988776

Notes:

[1] - Metrics were gathered by using https://github.com/LemmyNet/lemmy/compare/main...sunaurus:lemmy:extra_logging patch. and getting the data between two logging events. These numbers may be off by 0.01 as I rounded them for brevity sake.

Relevant Pictures!

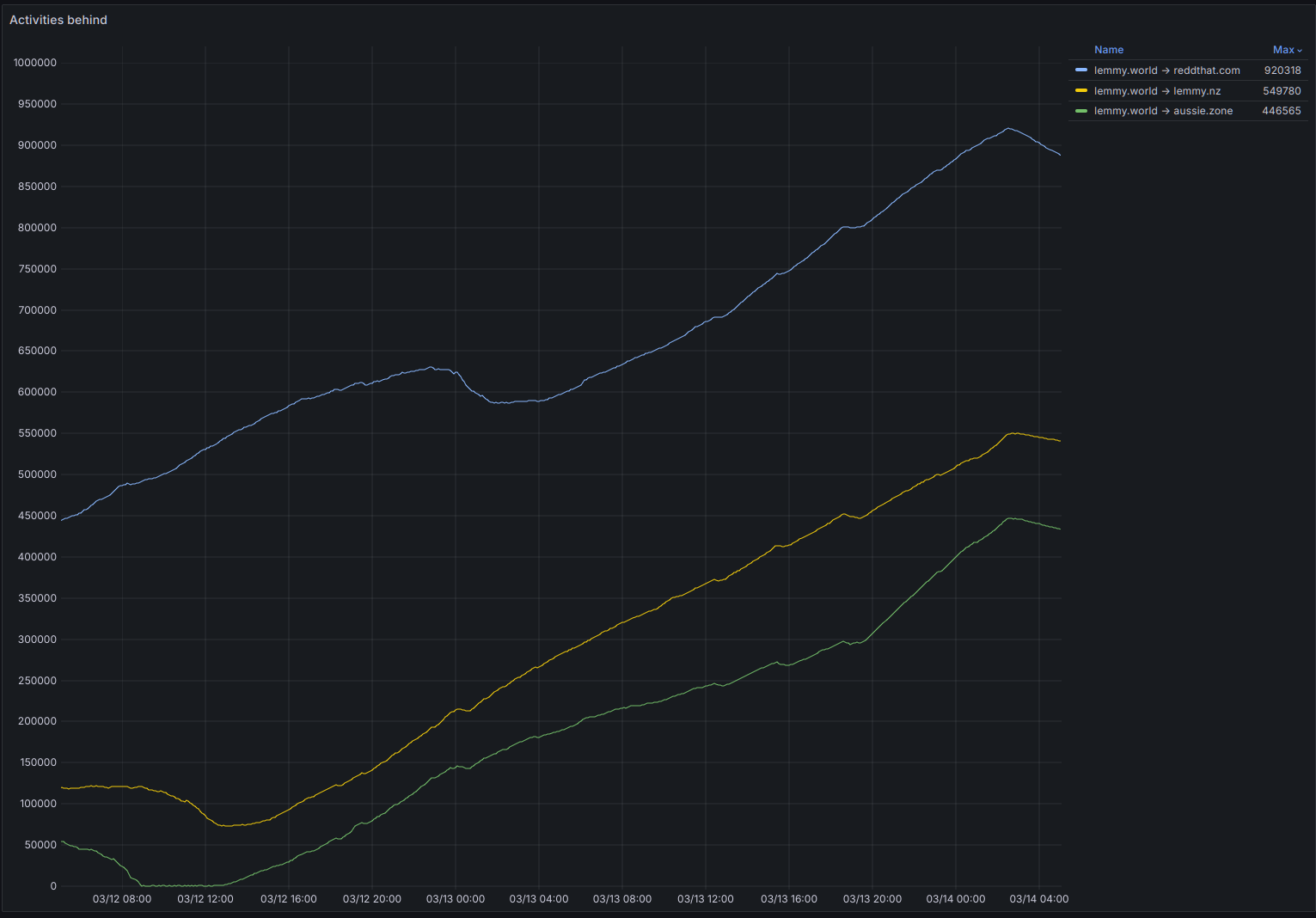

How far behind we are now:

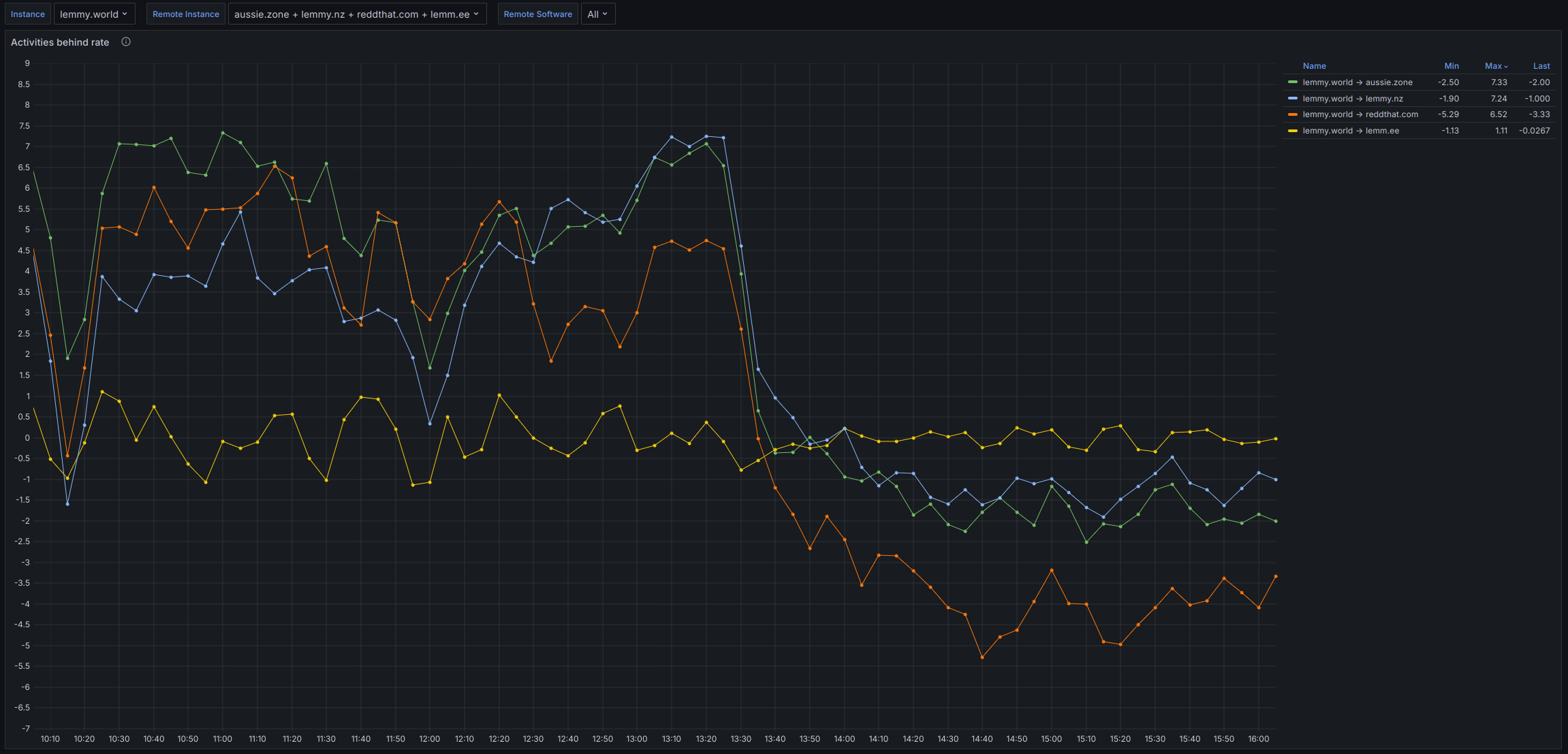

The rate at which activities are falling behind (positive) or if we are catching up (negative)