this post was submitted on 21 Dec 2025

946 points (98.4% liked)

Microblog Memes

9944 readers

3602 users here now

A place to share screenshots of Microblog posts, whether from Mastodon, tumblr, ~~Twitter~~ X, KBin, Threads or elsewhere.

Created as an evolution of White People Twitter and other tweet-capture subreddits.

Rules:

- Please put at least one word relevant to the post in the post title.

- Be nice.

- No advertising, brand promotion or guerilla marketing.

- Posters are encouraged to link to the toot or tweet etc in the description of posts.


founded 2 years ago


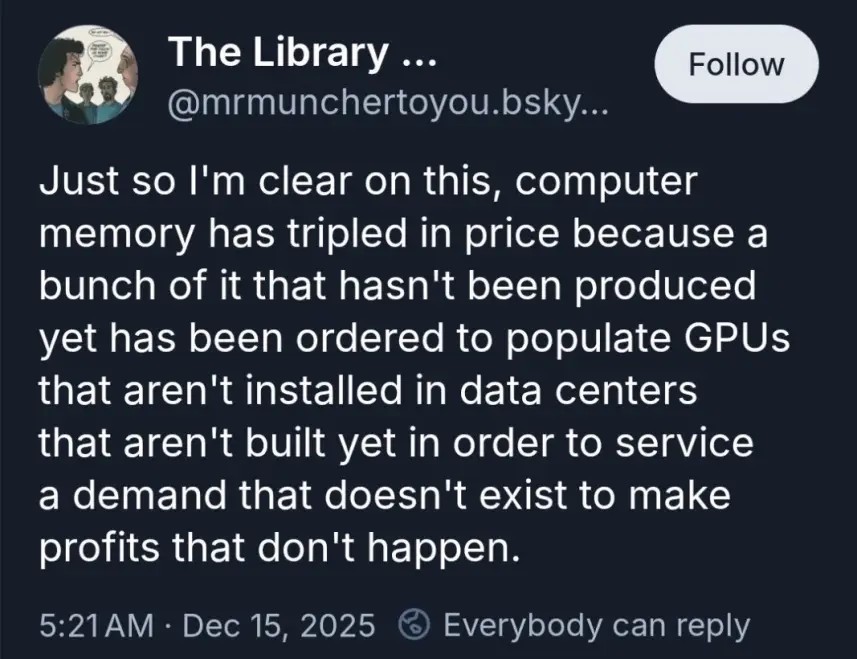

Microsoft and Nvidia have been trying for years to offload computing power to their own systems, while your computer becomes little more than a remote access terminal into this power when these companies allow you access to it.

See: Nvidia's GeForce Now, Xbox Cloud Gaming, and pretty much every popular LLM (there are self-hosted options, but that's not the major market rn, or the direction it's headed).

There are ofc struggles there that they've had a hard time overcoming. Gaming in particular needs a low-latency, high-speed internet connection, but that's not necessary for all applications, and it has been improving (slowly).

Open-weights models have actually gotten better and better, to the point that they can compete meaningfully with ChatGPT and Claude Sonnet. Nvidia is one of the ones spearheading this with Nemotron. The issue is more that most of the really competent models need lots of VRAM to run. Small models lag quite far behind, although with Nemotron Nano they are getting better.
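
To put rough numbers on the VRAM point, here's a back-of-the-envelope sketch. It only counts the memory for the weights themselves (parameter count times bytes per weight) and ignores the KV cache and activations, so real usage is higher. The 8B and 70B sizes are illustrative assumptions, not the sizes of any specific Nemotron model.

```python
def weight_vram_gib(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory needed for the model weights alone, in GiB.

    Assumption: every parameter is stored at the same precision.
    KV cache, activations, and runtime overhead are NOT included.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Hypothetical model sizes, chosen only to show the scale of the gap.
for label, size_b in [("small 8B", 8), ("large 70B", 70)]:
    for bits in (16, 4):
        gib = weight_vram_gib(size_b, bits)
        print(f"{label} @ {bits}-bit: ~{gib:.1f} GiB for weights")
```

Even quantized down to 4 bits, the larger example doesn't fit on a typical 24 GB consumer card, which is roughly why the big open-weights models stay out of reach for most self-hosters.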