Time to make our own gear, I guess. Anyone got chip production skills?

Microblog Memes

A place to share screenshots of Microblog posts, whether from Mastodon, tumblr, ~~Twitter~~ X, KBin, Threads or elsewhere.

Created as an evolution of White People Twitter and other tweet-capture subreddits.

Rules:

- Please put at least one word relevant to the post in the post title.

- Be nice.

- No advertising, brand promotion or guerilla marketing.

- Posters are encouraged to link to the toot or tweet etc in the description of posts.


I've got an air fryer, a deep fryer, a standard oven... Hell, I think I even have a spare potato lying around. Let's do this shit!

wrong chips, but fuck it - that'll do!

Uhh. Just chiming in here as someone who does business-to-business IT support... Most of the NPC office workers are almost demanding access to "AI" stuff.

I'm not saying this will turn out well; in fact, I think it will probably end poorly, but I'm not in charge around here. There's a nontrivial outcry for "AI" tools in business.

There's profit happening with it right now. Maybe not enough to offset costs yet, but there's a market for these things in the mindless office drone space.

To be absolutely clear, I think it's an idiotic thing to have/use, especially for any work in IT, but here we are. I have middle managers quoting ChatGPT as if it's proof that what they want can be done. I've been forwarded complete instructions for using fictional control panels and fictional controls to do a thing, when it's not possible for that thing to be done.

"AI" is a corporate yes-man in the worst ways possible. Not only does it agree that something can be done, even if it's not possible, but it provides real-enough-looking directions that what it's proposing seems actually possible and reasonable. I once asked Copilot how to drive to the moon and it told me I'd run out of gas. While I would definitely run out of gas trying to get to the moon by car, when I'm done trying and I've run out of gas, I wouldn't be any closer to the moon than I usually am.

The thing is an idiot on wheels at the best of times, and a blatant liar the rest of the time. I don't know how people can justify using it in business when a mistake can lead to legal action, and possibly a very large settlement. It's short-sighted, and it's not worth the time or effort involved in the whole endeavor.

Simply because they can read the writing on the wall. Corporate made every single decision possible to signal "use AI or get fired." With mass layoffs being driven mainly by whole industries pivoting to AI, people are fighting desperately to stay relevant. Every pundit and tech guru is singing "learn AI or become unemployable." It is a struggle for survival, not heartfelt trust or belief in the tech. Hell, they might not even understand how it works; people just know they need it on their CV to keep their meager income coming.

As someone who works in a knowledge industry, anyone relying on AI for their workload will end up with more errors than solutions. IT requires a high degree of accuracy in the information you handle that gets you to a solution. Out of everything you can say about AI, you can't say that it's highly accurate.

Any time I've given a technical question to Copilot or ChatGPT, I usually get nonsense back. It will not help me solve any of the issues I need to solve as a part of my job.

I understand how the current version of "AI" works, and from that knowledge, I know that for any meaningful task I face with even a small amount of complexity, these so-called "AI" bots can't possibly have any relevant answers. Most of the time I can't even find relevant answers by searching the Internet. Sometimes I only get adjacent information that helps lead me to the unique solution I need to implement.

"AI" in IT support actually makes things go slower and causes more issues and frustration than it provides tangible help with anything that needs to be done. You end up going down rabbit holes of misinformation, wasting hours trying to make an ineffective "solution" work, just because some "AI" chatbot sent you on a wild goose chase.

Does the LLM tech support ever actually work? I tried it many times, and it just creates garbage. Pre-written question-answer trees are more useful than this.

I've never seen something useful come out of an LLM when it comes to tech support.

For the most part, AI is the best OCR ever designed, and if used for that it really is great. Most AI agents you see out there are mostly just used for that: OCR.

It's also nice-ish for getting started on simple programs: if you know how it works, it sets you more or less on the right path in a few prompts. That head start can be nice.

It also helps in Excel with charting.

It also is helpful for acquiring knowledge. AS LONG AS YOU CHECK THE LINKED SOURCES.

If you don't, you will crash and burn. Not eventually, but quickly.

So yes, AI does have uses, and yes, it will cost some people their jobs, especially in knowledge industries and IT.

But then again, that's a tale as old as time. Stuff changes.

(AI) datacenters will not go away. Desktop processing will vanish. And then, 15 years from now, someone will get a great idea and start selling personal AI computers. And the cycle will start over.

Machine learning for OCR makes a ton of sense. Human writing is highly dynamic, especially handwriting. It makes sense that OCR would benefit from a trained model for recognising words.

I can help:

That was the excuse for memory.

They're also stopping production on affordable desktop graphics cards that don't AI well.

Next up, they're going to stop making SSDs and force the price of NVMe up.

They've yet to announce that they're going to drive up the prices of CPUs, motherboards and power supplies.

AI companies have found ways to force consumers to use their products against their will. These hardware vendors no longer need to compete to get you to buy: if you use a search engine, a browser, a mail service or a major social network, you're using their stuff, and the AI company buys it on your behalf. Since they can just charge you for that and you can't do anything about the price, your purchases no longer matter.

It's like food, cars, you know: everything but paychecks is going up.

We're pushing the gas pedal to a boring dystopia.

A.I. and big tech do not want you to have computing power to challenge their digital hegemony.

They will start pushing dumber and dumber devices and making development boxes so out of reach that only mega-wealth can afford to buy them.

Dumb devices will not be able to run shitty vibe-coded OSes and apps. Your modern Android phone has orders of magnitude more computing power than a 20-year-old PDA despite having the same (or even less) functionality. The same goes when compared to a 10-year-old Android device. Software has been becoming slower and more bloated for decades, and it's only going to accelerate with "ai".

There will be more software restrictions and locked down "ecosystems" but I don't see the hardware becoming weaker. There is no going back.

I uninstalled Google services and shit from a 60€ Android phone and boom! Standby battery life is now 7 days; before, it was ~2 days.

Well yeah, if it's not doing anything the battery will last longer, yup.

Microsoft and Nvidia have been trying for years to offload computing power to their own systems, while your computer becomes little more than a remote-access terminal into that power, available only when these companies allow you access to it.

See: Nvidia GeForce Now, Xbox Cloud Gaming, and pretty much every popular LLM (there are self-hosted options, but that's not the major market right now, or the direction it's headed).

There are, of course, struggles there that they have had a hard time overcoming. Particularly with something like gaming, you need a low-latency, high-speed internet connection; but that's not necessary for all applications, and it has been improving (slowly).

Actually, open-weights models have gotten better and better, to the point where they can compete meaningfully with ChatGPT and Claude Sonnet. Nvidia is actually one of the companies spearheading this with Nemotron. The issue is more that most of the really competent models need lots of VRAM to run. Small models lag quite far behind, although with Nemotron Nano they are getting better.

Software has been becoming slower and more bloated for decades and it's only going to accelerate with "ai".

This is mostly true, but a little misleading. (although the AI part is absolutely correct)

This is mostly a result of having more powerful hardware. When you're working with very limited hardware, you have to be clever about the code you write. You're incentivized to find trade-offs and workarounds to get past physical limitations. Computer history is filled with stuff like this.

Starting around the mid 90s, computer hardware was advancing at such a rapid pace that the goalposts shifted. Developers had fewer limitations, software got more ambitious and teams got larger. This required a methodology change. Code suddenly needed to be easier to understand and modify by devs who might not have a full understanding of the entire codebase.

This also benefited the execs: entirely unoptimized, or sometimes even unfinished, code could be brought to market, which meant a faster return on investment.

Today we are seeing the results of that shift. Massive amounts of RAM and powerful CPUs are commonplace in every modern device, and code is inefficient, but takes up basically the same percentage of resources that it always has.

This change to AI coding is unavoidable because the industry has decided that it wants development to be fast and cheap at the cost of quality.

The goal here isn't to have personal devices run the shitty vibe-coded apps; it's to lease time in datacenters to have them run it for you and stream it to your device for a monthly fee.


Sure, but there are deep-seated problems with this: (1) the shitty vibe-coded apps are so bloated that they can't run their client-side code without thick clients, (2) optimizing code is something nobody wants to do (or, in many cases, knows how to do), and (3) Internet access is still spotty in many parts of the US and will likely stay that way due to other digital landlords seeking rent for fallow fields.

Yeah and the fucking FTC isn’t going to do anything about it.

We need Lina Khan back.

For a purpose nobody wants, and will be used for mass surveillance and bleeding edge sentiment manipulation.

Well, hundreds of CEOs and billionaires want it. I know some who made AI their whole case for getting the CEO job. Just not us, the normal 99% of the world.

One nice thing about the current situation is we have a very effective and intuitive way to explain to gamers that capitalism is bad.

My favorite part is that even if these data centers get built, hardware to support LLMs is improving at a pretty fast rate due to the stupid amounts of money being burned on it.

This hypothetical hardware will be out of date by the time the data centers are ready for it, and they'll either be built with out of date tech or they'll blow past their budget due to actual differences in what it costs to make the up to date hardware vs what was planned.

My homelab welcomes the "outdated" or in need of maintenance chips coming my way.

Oh no, I can't use the latest text generation tool, and all I can do is crazy simulation, 3D modeling, and graphics work. What a shame. /s

That said, is it possible to get cast-off equipment from the likes of Amazon or Microsoft? I wouldn't mind some decom cloud stuff.

That's why they're making it expendable. Those chips are designed to survive no more than 5 years of service in data centers, an unprecedentedly low level of durability provisioning. They are intentionally making e-waste.

Well, yes, but it's not like this is the first time very expensive hardware was entirely expendable, or even the first time it makes sense to do so. Look at the Saturn V. It cost something like a billion per rocket in today's money and each one could only be used once. You had to build a whole new rocket every time you wanted to go to the moon. That's just how things were with the technology available at the time. The funny thing is it was actually cheaper per launch than the Space Shuttle in the end, despite the Space Shuttle being mostly reused/refurbished between launches.

Data center hardware has always had a limited lifespan due to new technology making it obsolete. Improvements in efficiency and performance make it cheaper to buy new servers than keep running old ones. I am pretty sure 5 or 6 years was already roughly the lifespan of these things to begin with. AI hasn't really changed that, only the scale has changed.

Comparing AI to the Saturn V is morally insulting. Sure, servers do have a lifespan. There's a science to the upgrade rate, and it was probably 5 years…back in the 90s, when tech was new, evolving fast, and e-waste wasn't even a concept. Today, durability is measured in decades, which means provisioning typically spans several decades.

There are many servers with chips from the last 20 years that could be spun up today and would still work perfectly fine. They were built with proper cooling and engineered to last. In 2020 I worked in a data center where they still had a 1999 mainframe in production, purring away like a kitten and not a single bit less performant. It just received more network storage and new RAM from time to time. This is not what is happening with AI chips. They are being designed to burn out and become useless from heat degradation.

All based on the promise from NVIDIA of chip design breakthroughs that still don't exist, for new LLMs that don't exist either. The problem is that, essentially, LLM tech has reached a pause in performance. More hardware, more data, and more tokens are not solving the problems that AI companies thought they would, and there's a development dead end where there are very few easy or simple improvements left to make.

Talking about a single mainframe lasting 20+ years is disingenuous given mainframes are not normal servers and inherently have a longer lifespan. Even then 20+ years is an exceptionally long operation for one of these, not because of hardware limitations, but because it normally does not make financial sense. Mainframes typically run legacy systems so they are the one place these kinds of financial rules don't apply.

The average operational lifespan of a server is still 5-6 years, as it's always been. Some businesses replace them every 3 years. A quick Google search would tell you that. That's not a limit inherent to the hardware, but simply how long they are warranted and deployed for in most instances. As I explained before, it doesn't make sense to keep servers around for that long when more modern options are available. Saying they "would still work perfectly fine" doesn't really mean anything outside of the hobbyist and used server market.

LLMs haven't reached a pause in performance, and they are only one category of AI models. If you actually kept track of advancements instead of sitting here whining about it, you would have seen that more has been happening in even just the last year than just throwing data and compute at the problem. I find your intentional ignorance to be morally insulting.

Lol, tell me you've never stepped inside a data center in your life without telling me.

Just because the US-dominated market is wasteful and destructive doesn't mean it is like that everywhere. You buy a server today and the offerings will be the same CPUs that were available five years ago. Servers are mean, powerful beasts, and upgrades have been slow and incremental at best for almost two decades. While manufacturer guarantees might last 7 to 10 years, operators offer refurbishment and refreshment services with extended guarantees. A decade-old server is not a rare sight in a data center; hell, we even kept some old Windows servers from the XP era around. Also, mainframes are most definitely not legacy machinery. Modern and new mainframes are deployed even today. It is a particular mode and architecture quirk, but it is just another server at the end of the day. In fact, the z17 is an AI-specialized mainframe that released just this year as a full-stack, ready-made AI solution.

A business that replaces servers every 3 years is burning money, and any sane CFO would kick the CTO who made such a stupid decision in the nuts, absent a very strong reason for it. Though C-suites are not known for being sane, it is mostly in the US that this kind of wastefulness is found. All this is from experience on the corporate IT side, not at all the hobbyist or second-hand market.

Yes I am well aware that modern mainframes exist, I am actually planning to get certified on them at some point. They are however a very niche solution, which you clearly should know, and often tasked to run software made decades ago. A mainframe from 1999 is not exactly modern.

If you legit are running Windows XP or Server 2003 then you are way out of government regulations and compliance and your whole team should be sacked immediately including you. Don't come on a public forum and brag about the incompetence of your whole organisation for fuck's sake. You just painted a target on your back. You clearly have no understanding of either cyber security or operational security doing things like that.

Which country's?

It is awfully privileged and insulting to imply such horrible things and wish harm on others because of your xenophobia and limited experience with diverse contexts.

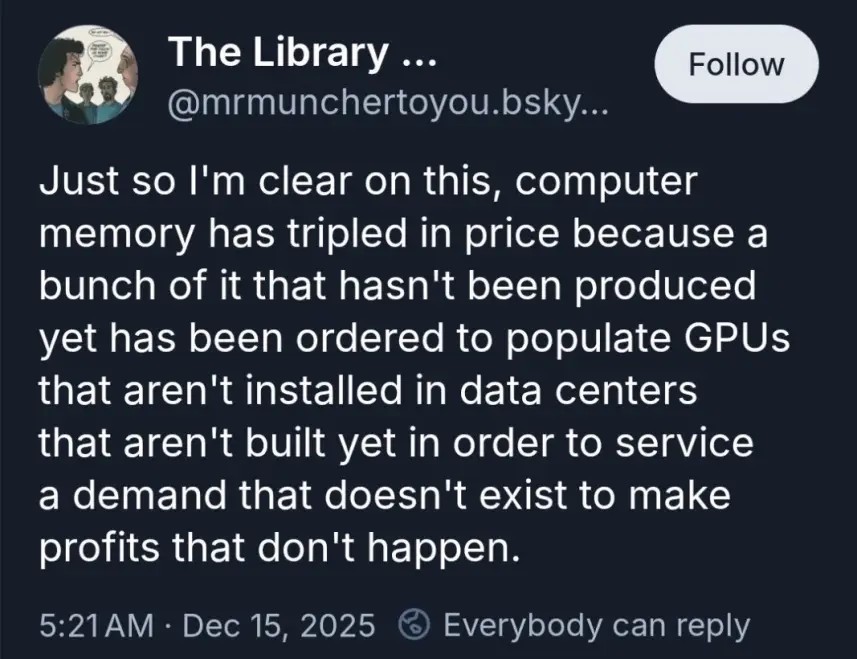

Correct. I will draw your attention to onion futures

Wow that was a fascinating read.

Tulip mania.

This actually isn't that weird; it happens all the time.

However, it's less common that it impacts a common consumer product of the same type.

But a thing to be used in making a huge project causing prices to shoot up ahead of time is very normal.

It's just usually stuff like concrete, steel, lumber, etc. that is impacted the most, but it turns out RAM as a global industry wasn't ready to scale up to a sudden huge spike in demand.

Give it a couple years and it'll level out as producers scale up to meet the new demand.

The producers have announced they will not increase production

Chinese factories are scaling to meet demand. The brands may not be the same but there will be new competition in the space because of the industry leaders abandoning a revenue stream.

It is happening to cars as well. Eventually you will only be able to rent a car and pay for each feature by the minute. Features such as brakes, lights, windshield wipers...